My research for Novel 2.0 has taken me down some colorful paths, but none more bizarre than a 2007 book by David Levy called Love and Sex With Robots: The Evolution of Human-Robot Relationships. In some ways, this topic is an extension of previous reading, especially The Singularity Is Near (2005) by Ray Kurzweil, an influence on my previous novel. Kurzweil is a software engineer and computer trend prognosticator who believes artificial intelligence will surpass human intelligence sometime around 2045, an event he calls the Singularity. It's a paradigm shift on a global scale, which means we can't see past it to its ramifications. Of course, always in motion is the future, and prophets tend to look foolish only a few years down the road. But Kurzweil's been making predictions for decades, and at least until now, they've been remarkably accurate. Short of believing it will never happen at all, I think the most conservative view possible is that he's off by a decade or two at most.

How could this be? Surely human consciousness is a magical state far beyond the capacity of any computer? To a person of faith, all intelligence is artificial intelligence, in that it was created and imbued by divine spirit. When such a person defends his or her beliefs in the light of all scientific data that contradicts them, he or she often points to two grand cosmic mysteries as indisputable evidence. First, the universe is so majestic that it could not have come to be without help. Second, life is so intricate, and the human mind so specifically complex, that it could not have evolved through merely physical processes. These arguments are not without merit. I'm swayed by them myself. But consider: What if we were to invent a computer with all the speed, power, memory, and basic knowledge (call it software) of the human brain and turn it on? What would happen? Would it talk like us, think like us, perhaps even love like us? Would it be artificially aware, or just intelligent? Sure, it could do difficult square roots in the blink of an eye. It might understand or even create knock-knock jokes. But would they be funny? Could it write an affecting sonnet on the subject of beauty? Could it write a satirical novel about what fools these mortal users be? What's the difference, fundamentally, between us and the equivalent computer? Would it be intelligent, even emotional, but in an alien way? Or would it be the "person" we instructed it to be?

First things first. How close are we, really, to building such a machine? The human brain is the single most complex volume of real estate in the known universe. It holds terabytes (trillions of bytes, or thousands of gigabytes) of memory and runs at somewhere around ten petaflops (quadrillions of operations per second), yet it draws only about 24 watts of power. Even discounting the high failure rate of human memory, that's still enough to dwarf any silicon computer ever made, in a fraction of the volume. Using current technology, any computer in the world would overheat in the blink of an eye if we tried to run it at the speed of a human brain. Impressive, right? Score one for the Maker.
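A watt is a rate (one joule per second), so the brain's daily energy budget is its power draw multiplied by the seconds in a day. Here's a back-of-the-envelope sketch in Python. It assumes the commonly cited rough estimates of about 10^16 operations per second (ten petaflops) and a steady draw of about 24 watts; neither is a measurement, and the constant and function names are my own, chosen for illustration.

```python
# Back-of-the-envelope brain energy math.
# ASSUMPTIONS: ~1e16 ops/s (ten petaflops) and a constant ~24 W draw.
# Both are rough popular estimates, not measured values.

BRAIN_OPS_PER_SECOND = 1e16      # ten petaflops
BRAIN_POWER_WATTS = 24.0         # watts = joules per second
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 seconds
JOULES_PER_KILOCALORIE = 4184.0  # one food Calorie


def daily_energy_joules(power_watts: float) -> float:
    """Energy used in one day by something drawing constant power."""
    return power_watts * SECONDS_PER_DAY


def ops_per_joule(ops_per_second: float, power_watts: float) -> float:
    """Operations performed per joule of energy consumed."""
    return ops_per_second / power_watts


energy = daily_energy_joules(BRAIN_POWER_WATTS)
print(f"Energy per day: {energy / 1e6:.2f} MJ "
      f"(about {energy / JOULES_PER_KILOCALORIE:.0f} kcal)")
print(f"Efficiency: {ops_per_joule(BRAIN_OPS_PER_SECOND, BRAIN_POWER_WATTS):.1e} "
      "operations per joule")
```

Put another way, a day of thinking costs roughly what a light meal supplies, which is the comparison no silicon chip of today can match.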

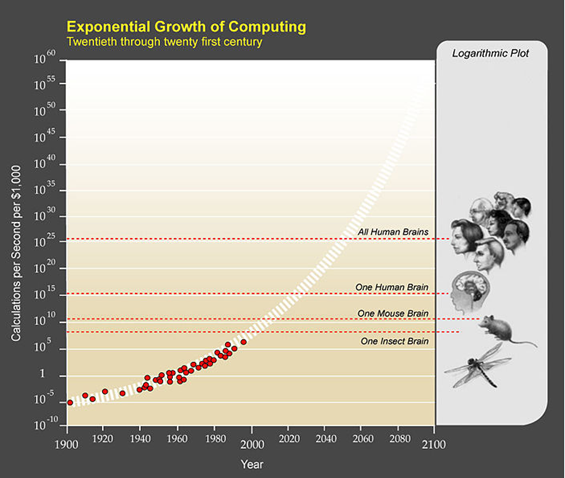

But wait! IBM is building a computer called SuperMUC for the Bavarian Academy of Sciences that will come online in 2012 and run at a maximum of three petaflops. Of course, that's less than a third of human brain speed, but it's clear we've come a long way in less than a century of computational engineering. Check it out:

This oft-cited graph, created by Hans Moravec in 2002, seems to show a nice steady climb toward artificial intelligence. A closer look reveals the graph uses a logarithmic scale, which compresses steep exponential curves into straight lines. Applicable here is "Moore's law," a computer engineering principle that can be grossly oversimplified to say computer power doubles every 18-24 months. That's not a steady straight-line climb; it's an exponential curve that skyrockets. If you're wondering how far we've come since 2002, the Intel Core i7 Extreme 965 CPU costs about $1000 (in equivalent dollars, that is), and it sits above the "1995 Trend" projection. In other words, we're doing even better than the projection predicted. Here's another useful graph for comparison:
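To see why a logarithmic axis turns that skyrocketing curve into a tidy straight line, here's a small Python sketch. It assumes a doubling every 24 months (the slow end of the 18-24 month range) and measures power relative to an arbitrary starting value of 1; the function name is mine, for illustration only.

```python
import math


def moores_law_power(years: float, doubling_period_years: float = 2.0,
                     start_power: float = 1.0) -> float:
    """Relative computing power after `years` if it doubles every period.

    ASSUMPTION: a clean, uninterrupted doubling every 24 months by default.
    """
    return start_power * 2 ** (years / doubling_period_years)


# Sample the curve once a decade over forty years.
samples = [(year, moores_law_power(year)) for year in range(0, 41, 10)]

for year, power in samples:
    # On a linear axis these values explode (1, 32, 1,024, ...), but their
    # base-10 logarithms climb by the same step every decade, which is why
    # a log-scale chart draws the exponential curve as a straight line.
    print(f"year {year:2d}: power x{power:>9,.0f}  log10 = {math.log10(power):.2f}")
```

Each decade adds five doublings, so the log value rises by the same amount (5 × log10 2, about 1.5) every time, even as the raw numbers run away from you.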

That's still a logarithmic graph, but it projects its curve into the future and compares it to four key brain-related markers (in terms of pure calculations per second). On that predictive graph, a computer worth $1000 in present-day value will reach the level of human intelligence in the 2020s. The same money could buy you a computer as "intelligent" as the entire human race by 2060. Yikes!
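Projections like this boil down to one piece of arithmetic: count how many doublings separate today's machine from the target, then multiply by the doubling period. The Python sketch below does exactly that. The starting figure of 10^13 operations per second per $1000 is purely hypothetical, picked to keep the numbers round, and the brain target uses the ten-petaflop estimate; swap in your own values.

```python
import math


def years_to_reach(current_ops: float, target_ops: float,
                   doubling_period_years: float) -> float:
    """Years for exponential growth to carry current_ops up to target_ops."""
    doublings_needed = math.log2(target_ops / current_ops)
    return doublings_needed * doubling_period_years


# HYPOTHETICAL starting point: ops/s that $1000 buys today (illustrative only).
HYPOTHETICAL_START_OPS = 1e13
# Ten-petaflop estimate for one human brain.
BRAIN_OPS = 1e16

for months in (18, 24):
    years = years_to_reach(HYPOTHETICAL_START_OPS, BRAIN_OPS, months / 12)
    print(f"doubling every {months} months: about {years:.0f} years")
```

Notice how much the answer moves with the doubling period alone: the same thousandfold gap takes about fifteen years at 18 months but about twenty at 24. That's the wiggle room hiding inside any single projected date.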

Ah, but let's not grab our torches and mob the Microsoft campus just yet. The fact is these graphs contain plenty of wiggle room, and there's more to intelligence than processing speed or memory. But we are on that path. For a while there, it looked as if the overheating problem might put the kibosh on Moore's law in the next few years, but an IBM project called silicon nanophotonics has found a detour around it. I won't bore you (or myself) with the details, but suffice it to say it reduces overheating significantly and opens a new door to progress. This year saw the beginning of an international "Exascale" project to build a computer that can run at a quintillion (10 to the 18th power) operations per second, or a hundred times faster than a human brain, by 2018. You know: eight years from now. The Internet already contains more information than any organic brain ever could, so a simple wi-fi connection gets around the memory hurdle. Since 1984, the Cyc [1] (short for "encyclopedia") Knowledge Base has been compiling a list of "common sense" factoids a computer must know before it can converse on a variety of subjects with a human being. And according to the famous Turing test, if a human exchanging text messages with a computer can't tell he or she is conversing with a computer, then guess what--that computer is artificially intelligent.
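Both hardware comparisons in this post, SuperMUC at "less than a third" of a brain and an exascale machine at "a hundred times" one, come from simple division against the same yardstick. Here's that arithmetic as a quick Python sketch, assuming the roughly ten-petaflop (10^16 operations per second) brain estimate those comparisons imply; the names are my own.

```python
# Compare machine speeds to a human brain.
# ASSUMPTION: a human brain runs at roughly 1e16 ops/s (ten petaflops).
BRAIN_OPS = 1e16

SUPERMUC_PEAK = 3e15    # SuperMUC's planned maximum: three petaflops
EXASCALE_TARGET = 1e18  # exascale goal: one quintillion ops/s


def relative_to_brain(ops_per_second: float) -> float:
    """How many 'brains' worth of raw operations per second a machine delivers."""
    return ops_per_second / BRAIN_OPS


print(f"SuperMUC: {relative_to_brain(SUPERMUC_PEAK):.2f} of a brain")
print(f"Exascale: {relative_to_brain(EXASCALE_TARGET):.0f} brains")
```

Keep in mind that this measures raw operations per second only. As noted above, there's more to intelligence than processing speed.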

Now, think about that. When you and I write to each other, I accept that you're an intelligent person, a mind, because you know what I know about life, you understand what I say, and you respond logically in real time. I'm not privy to all your internal mental meanderings, what theologians and philosophers call your "soul." In practical terms, I don't need to hear or read any of that stuff before I can accept your validity as a person of intelligence. I just need the outer results of such internal processes; you behave like a person so I believe you're a person. Heck, most pet owners don't even need that much.

Do we each have a "soul" per se, or are our thoughts and emotions the output of an amazing biological computer? Is there a ghost in that machine? Until now, any definition of the soul was purely mystical. We might even say it's defined by necessity, in that it fills open plot holes: The soul is whatever a human being (plus maybe animals, especially the cute furry ones) can think or feel but which cannot be generated by human biochemical processes alone.

If we build a supercomputer as capable, in both hardware and software terms, as a human brain, and we turn it on, and it seems to reason and have emotions and plan the way we do, we must then accept that computer as intelligent. Even if we know it has no soul beyond the physics, we cannot argue that such a magical property is required before a being can think. And if the computer behaves as if it feels emotions (for example, if it behaves as if it cares about us), we must then accept it has an "emotional life." Philosophers and other spiritual thinkers may debate whether emotions and "emotional" behavior are the same thing, but they'll be arguing semantics. The practical results will be the same. After all, I don't know my girlfriend really loves me. Some days she may not. But she acts as if she does, and that's the best I can reasonably hope for.

We live in an amazing time, when one of the key hypotheses proposed by religion, that only God can make a "soul," is about to be decided once and for all. I think it's the most important claim made by religion. It proposes a trans-Newtonian, even trans-quantum, place in the universe: the human mind. Such a spiritual soul would indeed be miraculous and could not have evolved. But if an Exascale computer with all the knowledge in Cyc and the memory of a cloud network at its disposal can't write original haiku, then the philosophers were right: We are metaphysical. If not, well...

If we discover a soul is unnecessary to explain human consciousness, and no physical evidence for a soul is ever found--after all, it never has been before--then surely we can conclude human beings don't have souls? And if we don't have souls, then mustn't it logically follow that there is no possibility of an afterlife? And if we have no afterlife, then mustn't it mean every religion ever devised is pure hokum? And if that were, in fact, true, would we really want to know it?

Clearly, these are earth-shattering questions, but that's not the half of it. Given the existence in my lifetime of a computer as smart as any human being, we'll have far more practical matters to consider; and despite decades of warning, they'll probably catch us with our pants down. Perhaps even literally: Will human beings have sexual relations, dare we say relationships, with artificial intelligences? I love my computer, but one day, will I love my computer? Will it love me, or just "love" me, and will I care about the difference? Or what if it doesn't love me at all--not because it can't love anyone, but because it hates me personally? Talk about rejection!

If I have sex with an artificial intelligence, am I cheating on my human relationship? What if my fembot and I claim to love each other?

What if my intelligent computer decides not to work for me? Is it right to require devotion and labor from another intelligence for free, or is that a pretty fair definition of slavery?

What if an intelligent computer wants the vote? What if that computer is as wise, or at least as intelligent, as an entire culture put together? Should it get a culture's worth of votes? Is emotion a strength or a liability in a voting booth?

Large parts of the country are still squeamish about certain kinds of human marriages. What about humans and computers who wish to tie the knot? It's possible to imagine adoptive parents who exist "only" as artificial intelligences manifesting through some virtual reality incarnation. The mind reels.

I know it sounds like science fiction, and at this point, it is. But it isn't fantasy. It's based on actual research and development, happening now, and it will almost certainly come to pass in the next forty years...

...if, that is, it doesn't take more than hardware and software to make a person. Things are about to get interesting. Call me crazy, but I'm looking forward to it.